You’re definitely right that image-processing AI doesn’t work in a linear manner the way text processing does, but training and inference are similarly fuzzy and prone to false positives and false negatives. (An early AI model misidentified dogs as wolves because it saw a white, snowy background and assumed that was where wolves would be.) And unless the model starts out perfect and stays that way, you need well-trained doctors to correct it, which the model apparently discourages.

If you think a word predictor is the same as a trained medical professional, I am so sorry for you…

Ironic. If only you had read a couple more sentences, you could have proven the naysayers wrong, and unleashed a never-before-seen unbiased AI on the world.

It’s nice to see articles that push back against the myth of AI superintelligence. A lot of people who brand themselves as “AI safety experts” preach this ideology as if it were a guaranteed fact. I’ve never seen any of them talk about real-life, present-day issues with AI, though.

(The superintelligence myth is a promotional strategy; OpenAI and Anthropic both lean into it because they know it boosts their valuations.)

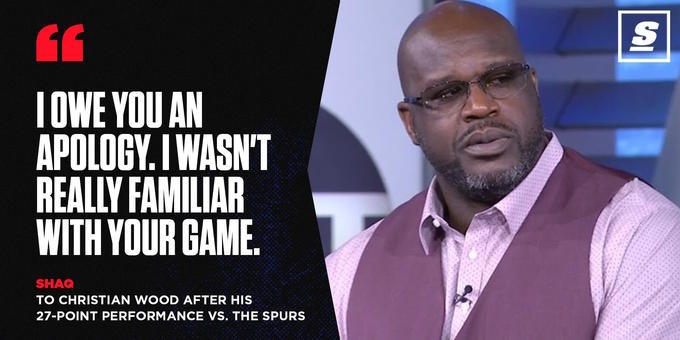

In the case of Moldbook or FaceClaw or whatever they’re calling it, a lot of the AGI talk is sillier than ever, frankly. Many people who register their bots have become entirely lost in their own sauce, convinced that because their bots are speaking in the first person, that they’ve somehow come alive:

It’s embarrassing, really. People promoting the industry have every incentive to exaggerate their claims on Twitter for the revenue, but some of them are starting to buy into it.

The conservative belief is that children are basically property and as such can be used for hard labor and kept from appropriate healthcare… But then when it comes to porn, Big Government has to do everything for them.

Nobody ever said it was a consistent ideology.

It’s optional; you can also just use your standard username and password.

Calculators are programmed to respond deterministically to math questions. You don’t have to feed them a library of math questions and answers for them to function. You don’t have to worry about wrong answers poisoning that data.

By contrast, LLMs are simply word predictors, so you can poison them with bad data, such as accidental or intentional bias or errors. In other words, that study points to the first step in a vicious cycle that we don’t want to start.

Even if we narrowed the scope of training data exclusively to professionals, we would have issues with, for example, racial bias. Doctors underprescribe pain medication to Black people because of prevalent myths that they have a higher pain tolerance. If you feed that kind of data into an AI, it will absorb the unconscious racism of those doctors.

And that’s in a best-case scenario that’s technically impossible. To get AI to even produce readable text, we have to feed it a ton of data that the people pumping it in cannot screen. (AI “art” has a similar problem: when people say they trained AI only on their own images, you can bet they just slapped a layer of extra data on top of a model built from other people’s work.) So yeah, we get extra biases regardless.

1 and 2: You still haven’t accounted for bias.

First and foremost: if you think you’ve solved the bias problem, please demonstrate it. This is your golden opportunity to shine where multibillion-dollar tech companies have failed.

And no, “don’t use Reddit” isn’t sufficient.

3. You seem to be very selectively knowledgeable about AI, for example:

> If [doctors] were really worse, fuck them for relying on AI

We know AI tricks people into thinking they’re more efficient when they’re less efficient. It erodes critical thinking skills.

And that’s without touching on AI psychosis.

You can’t dismiss results just because you don’t like them.

4. We both know the medical field is for-profit. It’s a wild leap to assume AI will magically not be, even if it fulfills every other assumption you’ve made up to this point and we ignore every issue I’ve raised.

> But an LLM properly trained on sufficient patient data metrics and outcomes in the hands of a decent doctor can cut through bias

I think Microsoft is in a position where it can grab all of OpenAI’s assets without giving them (more) money, if I understand the deal right.

Even if AI works correctly, I don’t see responsible use of it happening, though. I already saw nightmarish vertical video footage of doctors checking ChatGPT for answers…

Edit: Great talk. Good to know the AI true believers can handle a discussion of reality.

Resharing this to BuyEuropean communities

Is that seriously an “AI is like a child” poster made to motivate workers?

AI companies sure love to treat humans like machines, while humanizing machines.

I don’t think the QR code has anything to do with encryption, though. They’re just trying to make it convenient to sign in.

Meanwhile, Matrix has a lot of extra stuff going on behind the scenes.